Reading Ambitiously 6-27-25

Software 3.0, Infrastructure > Apps > Infrastructure, LLMs as OS, Agentforce 3.0, OpenAI drama, Andy Jassy on GenAI, Coatue EMW, Going the Extra Mile & Hustle

Programming Note: Reading Ambitiously will be off to celebrate Independence Day 🇺🇸. We’ll return on July 11th!

Enjoy this week’s Reading Ambitiously Big Idea read by me!

The big idea: the dawn of software 3.0

The art of the possible

When I started my career at IBM, we were building the Business Analytics portfolio—Cognos, SPSS, early tools for statistical prediction, modeling, and forecasting. We traveled the globe, meeting with companies about how IBM Watson could enable their businesses with AI. The potential felt limitless, especially after Watson won Jeopardy.

But we kept hitting the same wall: we never had the data, or if we did, it was fragmented, unlabeled, and inconsistent. The models could do amazing things, but only with clean inputs. Most organizations weren't there yet.

Every conversation got stuck in the "art of the possible." We could theorize endlessly, but without quality data, we couldn't make it real. Real intelligence was locked away—accessible only to those with perfect data and specialized expertise.

Building the foundation

Attempts to implement advanced analytics and early versions of ML created awareness. Organizations woke up to their data's value. "Data is the new oil" became the rallying cry at the C-level, but first, they had to organize it.

The next decade was about building the foundation. Snowflake, Databricks, and a generation of infrastructure companies emerged to help organizations transform fragmented data into usable information. If you didn’t build a data warehouse, you likely built a SQL-based datamart to “stitch it all together.”

The foundation was built—and it sort of worked. The data was unlocked. Companies got better reporting, better business intelligence, and better insights from their information. But game-changing artificial intelligence was still a dream.

The hero emerges

Then something remarkable happened. We invented Large Language Models, or LLMs. These LLMs are changing software, and it’s happening at a pace unlike anything we’ve seen before.

Andrej Karpathy called this "Software 3.0" at Y Combinator's recent AI Startup School, and it represents something fundamentally different from everything that came before. If you have 30 minutes this week, I highly recommend watching his presentation (it already has 1M+ views).

In Software 1.0, we wrote logic by hand. If you wanted a feature, you coded it. Programming required years of training.

In Software 2.0, we trained models to find patterns. But this required massive labeled datasets and specialized infrastructure that most companies didn't have. Artificial Intelligence was still unachievable for most.

In Software 3.0, you interact with pre-trained models using natural language. Your program is the prompt. The model already knows how to reason—you just need to connect it to your data and systems. Anyone who can speak can program.
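To make the three eras concrete, here is a minimal Python sketch of one task (labeling a review as positive or negative) written three ways. Everything in it is an assumption made up for illustration: the word lists, the tiny training set format, the prompt wording, and especially `fake_llm`, which is only a stand-in so the example runs without a real model.

```python
from collections import Counter
from typing import Callable

# Software 1.0: a person writes the logic by hand.
POSITIVE_WORDS = {"great", "love", "excellent"}   # illustrative word lists
NEGATIVE_WORDS = {"bad", "hate", "terrible"}

def classify_v1(text: str) -> str:
    words = set(text.lower().split())
    score = len(words & POSITIVE_WORDS) - len(words & NEGATIVE_WORDS)
    return "positive" if score >= 0 else "negative"

# Software 2.0: the behavior is learned from labeled examples.
def train_v2(examples: list[tuple[str, str]]) -> dict[str, Counter]:
    counts = {"positive": Counter(), "negative": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def classify_v2(model: dict[str, Counter], text: str) -> str:
    words = text.lower().split()
    pos = sum(model["positive"][w] for w in words)
    neg = sum(model["negative"][w] for w in words)
    return "positive" if pos >= neg else "negative"

# Software 3.0: the program is the prompt, written in English.
PROMPT = (
    "Classify the sentiment of this review as positive or negative. "
    "Reply with one word.\n\nReview: {text}"
)

def classify_v3(text: str, llm: Callable[[str], str]) -> str:
    # `llm` is any function that takes a prompt and returns the model's reply.
    return llm(PROMPT.format(text=text)).strip().lower()

def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call, so the sketch runs offline.
    return "negative" if "terrible" in prompt.lower() else "positive"
```

Notice where the work lives in each version: in the code for 1.0, in the labeled data for 2.0, and in a couple of English sentences for 3.0. Swapping `fake_llm` for a real model call is the only change the third version would need.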

This is the democratization of intelligence—not just access to smart tools but the ability for anyone to create, automate, and solve problems by simply describing what they want in English.

Wow!

Technology adoption is reversed this go around

For the first time in a technology revolution, the consumer, not governments or enterprises, is empowered first.

Why? Today, LLMs are trained on the organized internet (Common Crawl, GitHub, Wikipedia, academic papers), essentially a .zip file of the web. They have shown us what is possible when AI finally has access to comprehensive, structured data. ChatGPT feels like magic because the model already has what it needs to be useful in our consumer lives, and many of us use it like a better version of Google.

But this is bigger than just better search results. For the first time in computing history, English became a programming language. The most natural interface humans have—conversation—became the way we interact with these new systems.

Software 3.0 systems are starting to behave more like operating systems than search engines.

The LLM acts as the CPU, the context window functions as RAM, and prompts become system calls. These systems orchestrate memory and compute for problem solving, managing input and output across different tools and modalities.
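One rough way to picture the analogy is the toy Python sketch below. It is not how any real LLM product is built. `ContextWindow`, `toy_model`, `run`, and the word-count "tokenizer" are hypothetical names and simplifications invented for this example. The context window is a bounded memory that evicts its oldest entries, the model is the "CPU" that reads from it, and submitting a prompt plays the role of a system call that may trigger a tool.

```python
from collections import deque
from typing import Callable

class ContextWindow:
    """The "RAM": bounded working memory that evicts the oldest entries."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.entries = deque()

    def tokens(self) -> int:
        # Crude assumption: one token per whitespace-separated word.
        return sum(len(entry.split()) for entry in self.entries)

    def write(self, text: str) -> None:
        self.entries.append(text)
        # Drop the oldest entries until we are back under budget.
        while self.tokens() > self.max_tokens and len(self.entries) > 1:
            self.entries.popleft()

def toy_model(context: list[str]) -> tuple[str, str]:
    """The "CPU": a stand-in that either requests a tool or answers."""
    last = context[-1]
    if last.startswith("What time"):
        return ("tool", "clock")
    return ("answer", f"Echo: {last}")

def run(prompt: str,
        model: Callable[[list[str]], tuple[str, str]],
        tools: dict[str, Callable[[], str]],
        memory: ContextWindow) -> str:
    """The "system call": a prompt goes in, the model decides what happens."""
    memory.write(prompt)
    kind, value = model(list(memory.entries))
    result = tools[value]() if kind == "tool" else value
    memory.write(result)  # output lands back in working memory
    return result
```

Calling `run("What time is it?", toy_model, {"clock": lambda: "12:00"}, ContextWindow(max_tokens=6))` routes the prompt through the tool and stores both the question and the result. A later, longer prompt pushes the oldest entries out, much like a small RAM filling up.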

We're in what Andrej calls the "1960s era" of this new computing paradigm. LLM compute is expensive and centralized in the cloud, forcing us into a timesharing model where we're all thin clients interacting over the network. The personal computing revolution for AI hasn't happened yet, but it's coming. Perhaps this brings even more clarity to why Sam Altman and Jony Ive are teaming up: they are chasing the “PC moment.”

Consumers first, enterprises next

For Software 3.0 to reach its full potential in the enterprise, we need to solve some fascinating puzzles. These aren't obstacles—they're opportunities for builders who see what's coming.

The infrastructure opportunity is massive: Every company we talk to is deploying AI, but they're discovering they need entirely new tools to do it efficiently. The models are just the tip of the iceberg.

Underneath, there's an entire stack of problems that need solving: orchestration for complex workflows, observability for AI systems, data labeling, security and access controls, data warehouse integrations, role-based permissions, auditability, latency optimization, and dozens of other challenges that now need to be reimagined for AI systems.

USV observed that in a technology revolution, new infrastructure inspires applications (e.g., LLMs inspired ChatGPT), and then applications inspire additional infrastructure (e.g., the iPhone brought GPS, 5G and an app store that set the stage for Uber).

If you’re a builder, there are a ton of capabilities underneath the iceberg that will lead to an entirely new wave of infrastructure companies rising up to meet the challenge of building AI-native products. Said another way: AWS is here, so who's going to start Datadog? Many of you have already begun working on these opportunities.

We need new design patterns. How do humans and AI systems work together most effectively? There isn’t really an agreed-upon “GUI” yet. We’ve talked about the importance of voice this year, which has emerged as an excellent way to interact with AI. There will be other new and important patterns that designers create to unlock AI’s full potential.

Karpathy introduced the concept of the "autonomy slider"—the idea that we should give users control over how much autonomy they give the AI. Take Cursor, the AI code editor:

Tab: Autocomplete a single line of code (minimal autonomy)

Cmd+K: Rewrite a selected block (moderate autonomy)

Cmd+I: Edit across multiple files (higher autonomy)

Each level gives the model more control while maintaining human oversight through visual diffs and accept/reject mechanisms. We all want fully autonomous agents, but a stepping stone will be designing partial-autonomy products that let humans and AI work together effectively. This will allow users to build trust with the system.
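Here is one way the slider idea could be sketched in Python. This is an illustration under my own assumptions, not Cursor's actual implementation or API: `Autonomy`, `Edit`, `scope_allowed`, and `apply_with_review` are hypothetical names, and the three levels only loosely mirror Tab, Cmd+K, and Cmd+I. The point is that the level caps how much the model may propose, and a human still accepts or rejects each change.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable

class Autonomy(IntEnum):
    LINE = 1        # loosely like Tab: a single-line suggestion
    BLOCK = 2       # loosely like Cmd+K: changes within one file
    MULTI_FILE = 3  # loosely like Cmd+I: changes across files

@dataclass
class Edit:
    path: str
    old: str
    new: str

def scope_allowed(level: Autonomy, edits: list[Edit]) -> bool:
    """Check that the proposed edits fit within the chosen autonomy level."""
    files = {edit.path for edit in edits}
    if level == Autonomy.LINE:
        return len(edits) == 1 and "\n" not in edits[0].new
    if level == Autonomy.BLOCK:
        return len(files) <= 1
    return True  # MULTI_FILE places no limit on scope

def apply_with_review(level: Autonomy,
                      edits: list[Edit],
                      approve: Callable[[Edit], bool]) -> list[Edit]:
    """Return only the edits a human accepted; refuse out-of-scope proposals."""
    if not scope_allowed(level, edits):
        raise PermissionError(f"Proposal exceeds autonomy level {level.name}")
    # `approve` stands in for the accept/reject step on a visual diff.
    return [edit for edit in edits if approve(edit)]
```

Turning the slider up only widens what the model is allowed to propose. The `approve` callback stays in place at every level, which is where trust gets built.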

But the autonomy slider is just one example. We're still in the early days of figuring out how to design for this new paradigm. There are no standard patterns yet, no settled expectations.

Why this matters now

Because this is the first time in my personal experience we have the data and we have the models.

For most of my career, the world has globalized things, managed things, and focused on optimizing and driving costs down. We’ve done a lot of 1 to 2. But this feels like a really good time to build things, make things, be innovative, and go from 0 to 1. I’m not the only one who feels this way. In a recent interview with Jack Altman, Marc Andreessen shared that a16z is betting on a total rewrite.

What makes this possible is the emergence of Software 3.0: a paradigm where intelligence is democratized, where English becomes the programming language, where data is more available than ever, and where anyone can automate and create simply by describing what they want.

We're in the early chapters of this story. The hero is emerging, showing us glimpses of what's possible. The foundation is more ready than ever. The opportunities seem enormous.

If you have an entrepreneurial mindset, now is a great time to be a builder. To play for the upside vs. protect the downside.

The question isn't whether Software 3.0 and AI will transform how we work; that feels inevitable at this point. It’s how you plan to make your mark!

Best of the rest:

🤖 Salesforce doubles down on AI agent control — Agentforce 3 introduces a Command Center for full observability, plug-and-play interoperability via MCP, and 100+ prebuilt actions to help enterprises monitor, evolve, and scale their digital workforce with confidence. — Salesforce

⚔️ ChatGPT’s enterprise success fuels OpenAI–Microsoft rivalry — Microsoft is struggling to sell Copilot to corporations as employees increasingly prefer using ChatGPT, turning a strategic partnership into a high-stakes battle for AI dominance at work. — Bloomberg

🤖 Amazon’s AI Future Is Agentic — CEO Andy Jassy outlines how Generative AI is reshaping everything from Alexa to inventory forecasting, and why the next wave of innovation will come from intelligent agents working across every corner of the company. — Amazon

Charts that caught my eye:

→ Why does it matter? AI supercomputers are scaling faster than Moore’s Law—and shifting into private hands. Performance at the frontier is growing 2.5x per year, but so are the costs: each new generation doubles in power demand and hardware expense. If current trends hold, training the top model in 2030 could require as much energy as a small city and cost hundreds of billions. At the same time, industry control has grown from 40% to 80% of total compute since 2019, with the U.S. commanding three-quarters of global capacity.

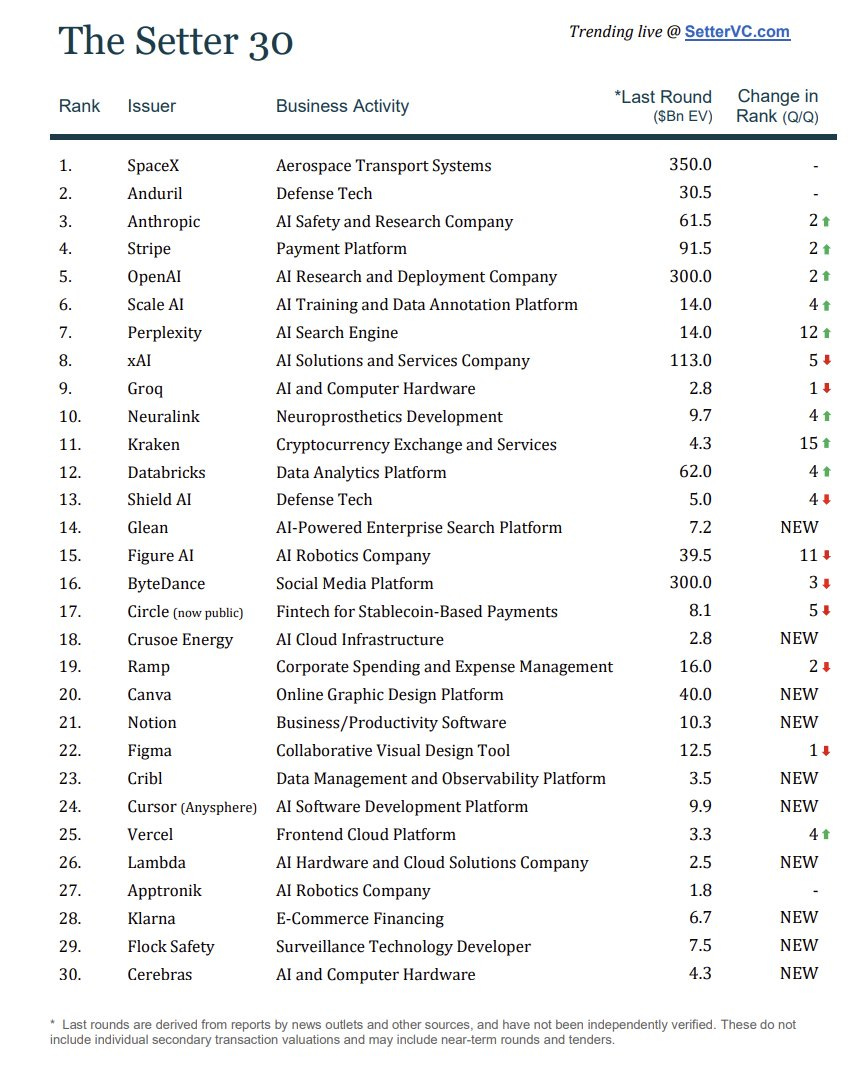

→ Why does it matter? The 30 most in-demand startup secondary shares as of Q2 '25. Interesting to note the M&A activity going on here too. #6 is Scale AI, where Meta just announced a 49% ownership position.

Tweets that stopped my scroll:

→ Why does it matter? Over the weekend, OpenAI was forced to take down the announcement about the acquisition of Jony Ive’s company. Turns out there is quite a story behind the scenes which OpenAI has called “baseless”. Since the story hit, Sam Altman has publicly shared emails from the Founder & CEO at IYO. A nice reminder to never write emails you don’t want the world to read.

→ Why does it matter? Karpathy is credited with coining “vibe coding.” Perhaps he and Tobi will help evolve prompt engineering into context engineering.

Worth a watch or listen at 1x:

→ Why does it matter? If you haven’t dug into the Coatue EMW 2025 keynote, you really should. Link is here. The BG2 crew did a great job of unpacking some of their favorite key insights with the Coatue team. In one hour you’ll have a complete understanding of how Coatue sees the global investment opportunity across public and private markets.

→ Why does it matter? JP Morgan is spending $2B/year on AI and the person in charge now works directly for Jamie! “Every time we meet at every detailed meeting, we have people saying ‘What can AI do to make it better?’” You just have to love how down to earth Jamie is in an interview like this.

→ Why does it matter? A quick listen packed with timeless insights from Ken’s experience building Citadel. I particularly enjoyed his points around minute 13 where he talks about “going the extra mile.”

Quotes & eyewash:

“Things may come to those who wait, but only the things left by those who hustle.”

Abraham Lincoln

→ Why does it matter? I like the You All Better Read This headline. Have to drink your own champagne!

→ Why does it matter? The AI-generated “vlogs” of key moments in history are hilarious! Have a great Fourth of July everyone! 🇺🇸

The mission:

The Wall Street Journal once used ‘Read Ambitiously’ as a slogan, but it became a challenge I took to heart. If that old slogan still speaks to you, this weekly curated newsletter is for you. Every week, I will summarize the most important and impactful headlines across technology, finance, AI and enterprise SaaS. Together, we can read with an intent to grow, always be learning, and refine our lens to spot the best opportunities. As Jamie Dimon says, “Great leaders are readers.”

I really like the viewpoint and focus on consumers. Something I've been doing lately is making up for gaps in products with things that I make myself (e.g., a Facebook Marketplace poster, a meta-knowledge app that handles things a little better than Notion, Evernote, etc.). The goal isn't to monetize these things; it's to fill needs that existing solutions skip. Because the cost of a vibe coding stack (Lovable + Cursor + Supabase in my case) is so affordable, it's actually cheaper than paying subscription fees to the more generic apps (Evernote, Notion, etc.), and it's built around my workflow by design.