Reading Ambitiously 6-13-25

memory and context, idea resistance, forward deployed engineers, openai at $10b, US IPO return, mag 7 dislocation, meta talent wars, khosla on pitches, king’s mindset, apple glass.

*NEW* This week's big idea, read to you by me!

The big idea: memory and context are going to create big moats and big conversations about data

Since the earliest days of enterprise SaaS, one foundational question has always mattered:

If you generate the data, who owns it?

For most of the last two decades, the answer was straightforward: the customer. That clarity defined how software companies were built. Applications like Salesforce and NetSuite stored your records—but you controlled the data.

During these decades, we entered the engagement and business intelligence era. Organizations began hiring Chief Data Officers. The mandate was clear: get the data out. Out of black boxes. Out of structured databases where it was trapped behind forms and dashboards. We built entire tooling ecosystems around this goal—APIs, SDKs, ETL pipelines, reverse ETL platforms. It became table stakes for SaaS companies to support clean, programmatic access to their customers’ own data.

And in many cases, vendors monetized that access: charging for exports, metering API usage, launching their own BI and analytics capabilities, or packaging data rights into premium products.

Platforms like Snowflake, Databricks, and ServiceNow became essential destinations in this movement. They helped organizations unify data across functions, clean it, query it, and feed it into dashboards and forecasts built with tools like Microsoft Power BI.

The data warehouse became a major source of insight. The CEO of one application company that lacked native business intelligence capabilities once told me: “For every $1 of accurate accounting we sold, we lost $5 to someone who got the data out into better reporting.”

But… it was still mostly backward-looking. It told us what happened.

Now we’ve entered a new phase.

AI systems aren’t being built just to analyze data. We’re designing them to learn from it. To build intelligence on top of it. To act on our behalf—proactively, autonomously, and contextually.

And that brings us back to the original question: If an AI learns from your data… who owns what it becomes?

That’s what made last week’s controversy over Slack (owned by Salesforce) such a hot topic in tech circles.

Slack acknowledged it had been using customer messages and files to train its machine learning models. These weren't LLMs (yet) but internal systems like search ranking and channel recommendations. Crucially, customers were opted in by default and had to opt out to stop it. Some customers had no idea their data was being used to improve Slack’s products until a footnote in the documentation blew up online. (Read your contracts… the enterprise customers certainly knew about this.)

At the same time, there were reports that Salesforce has been moving to restrict third-party AI applications like Glean from accessing its customers’ data. CEO Marc Benioff has said that the customer data in his applications is valuable in the AI era.

All this backlash isn’t about emojis or search ranking. It’s about the feeling that someone else's system is getting smarter at your expense. It is about control.

But even as you want control, you’re also going to want your vendors to unlock the full potential of AI for you. And you’re going to want to bring your data into those capabilities, just as you do today with your business intelligence stack.

From Data to Intelligence

Unfortunately, it’s not that simple. This isn’t just about moving data; it’s about creating intelligence. And the data you generate is going to shape the intelligence of the systems you adopt, deploy, or design.

A big part of that intelligence will come from something new: memory and context.

Memory is what the system retains from past interactions—what it has learned about you, your business, your preferences, and your behavior.

Context is what the system surfaces at runtime—what it knows about your current goal, environment, and history, so it can act more intelligently in the moment.

These two concepts—memory and context—are what make AI feel less like software and more like a teammate. They’re also what make data ownership far more complicated than it used to be.
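For readers who think in code, here is a toy sketch of the distinction. It assumes a hypothetical `Assistant` class, not any vendor's real API. Memory is a store that persists across sessions. Context is assembled fresh for each request from the current goal, the environment, and whatever memory looks relevant. Real systems typically use embeddings and retrieval rather than the naive keyword match shown here.

```python
from dataclasses import dataclass, field


@dataclass
class Assistant:
    """Hypothetical AI assistant illustrating memory vs. context (illustration only)."""

    # Memory: what persists across interactions (learned preferences, norms, exceptions).
    memory: dict[str, str] = field(default_factory=dict)

    def remember(self, topic: str, lesson: str) -> None:
        # Store something learned in one session so later sessions can reuse it.
        self.memory[topic] = lesson

    def build_context(self, goal: str, environment: dict[str, str]) -> str:
        # Context: built at runtime from the current goal, the current environment,
        # and any remembered lessons whose topic appears in the goal
        # (a naive keyword match, assumed here purely for illustration).
        relevant = {
            topic: lesson
            for topic, lesson in self.memory.items()
            if topic in goal.lower()
        }
        lines = [f"Goal: {goal}"]
        lines += [f"Environment {k}: {v}" for k, v in sorted(environment.items())]
        lines += [f"Memory {t}: {lesson}" for t, lesson in sorted(relevant.items())]
        return "\n".join(lines)


assistant = Assistant()
assistant.remember("refund", "Refunds over $500 go to finance for approval.")
assistant.remember("travel", "Team prefers aisle seats.")
print(assistant.build_context("Handle a refund request", {"channel": "support"}))
```

Notice where the value accumulates. The `memory` dict grows with every interaction, and nothing in this sketch gives the customer a way to export it.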

The Moat Behind the Model

Because here’s the conundrum:

If your systems are going to get smarter over time, they’ll need memory.

If they’re going to be helpful in the moment, they’ll need context.

And both will be shaped by your data—data you’ve created, curated, and often paid to manage.

So: Who owns that memory? Can it move with you? Should it be reusable by vendors who benefited from seeing how your business works? What happens when your usage of AI is making your experience exponentially better?

The New Intelligence Layer in the Stack

For years, we optimized the enterprise stack for visibility. Systems of Record stored structured facts (CustomerID = 1). Systems of Engagement enabled communication, coordination, and workflow. The modern data stack pulled from both to feed analytics tools.

But AI isn’t just feeding on data. It’s forming a model of you.

It’s remembering how your team resolves disputes, how you respond to pressure, how exceptions get handled, and where decisions actually happen.

And those systems—ones learning silently in the background—don’t sit neatly in today’s stack.

They don’t just hold data. They hold understanding. And once they learn how you work, they become very good at automating that work.

We’ve built interfaces to export dashboards, records, and logs. But we haven’t yet built an interface to export what the AI has learned. What makes you you to the system (the implicit knowledge, the judgment, the working memory) isn’t portable, at least not yet.

That’s what makes memory such a powerful asset for an AI infrastructure company.

We’ve spent the last decade getting serious about data ownership. But is owning what an AI has learned about you the same as owning your data, or is it something different? Because if intelligence becomes the output, and memory becomes the byproduct, then whoever captures that memory controls a very big moat.

The Trust Layer

This isn’t a problem we’ll solve with a checkbox or a better export button. It’s going to require new thinking across product, architecture, and a lot of trust.

So whether you're building or buying AI systems, ask:

What will this system learn from us?

What does our contract allow them to retain?

Will we benefit from that memory?

And when the time comes… can we take it with us?

This isn’t just a technical challenge. It’s a trust challenge. And we’re only beginning to define the terms.

You’re going to love how much better the AI experience is when it has memory, context, and access to your data. But can you trust it? Perhaps this is why Facebook once let you set your relationship status to “It’s complicated.” Because this one is going to be.

Best of the rest:

🧠 Friday Forward – Idea Resistance (#487) - Robert Glazer explains how great ideas often get killed not because they're bad, but because leaders subconsciously panic about how they'll be implemented and who will do it. The key? Separate the what from the how and the who. - Friday Forward by Robert Glazer

🧱 Trading Margin for Moat: Why the Forward Deployed Engineer Is the Hottest Job in Startups - a16z explores why startups are embracing services-led growth—and how forward deployed engineers are becoming key to building defensible moats, even at the cost of short-term margins. - a16z

🔪 Well-Rounded Doesn’t Cut - From Derek Sivers’ Your Music and People: to stand out, don’t be well-rounded—be sharply defined. Focused, memorable work cuts through apathy. “Be sharp as a knife, cut through the pile of apathy, and make a point.” - Derek Sivers

💸 OpenAI Hits $10 Billion in Annual Recurring Revenue, Fueled by ChatGPT Growth - Just two and a half years after launching ChatGPT, OpenAI has crossed $10B in ARR—driven by strong demand across consumer, enterprise, and API products. - CNBC

🤖 The Singularity Will Be Gentle – Sam Altman lays out a calmly radical vision of our AI-powered near future—where superintelligence arrives not with a bang, but as a steady climb, reshaping productivity, discovery, and daily life in ways that feel both inevitable and astonishing. – Sam Altman’s Blog

📚 Being Useful Isn’t the Goal — Jason Cohen (of WP Engine fame) makes a sharp case that being “useful” isn’t the same as being valuable—and why over-optimizing for utility can stall your growth, your career, or your company. – Better Than Random

Charts that caught my eye:

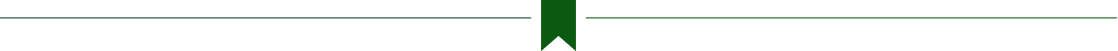

→ Why does it matter? The IPO window is creaking back open—and not just for the giants. 2025’s early slate of tech listings shows something rare: momentum. Deals are pricing above range. First-day pops are back. And most striking, the average post-IPO performance is up 122%, with names like CoreWeave, Reddit, and Circle riding secular AI and infrastructure tailwinds.

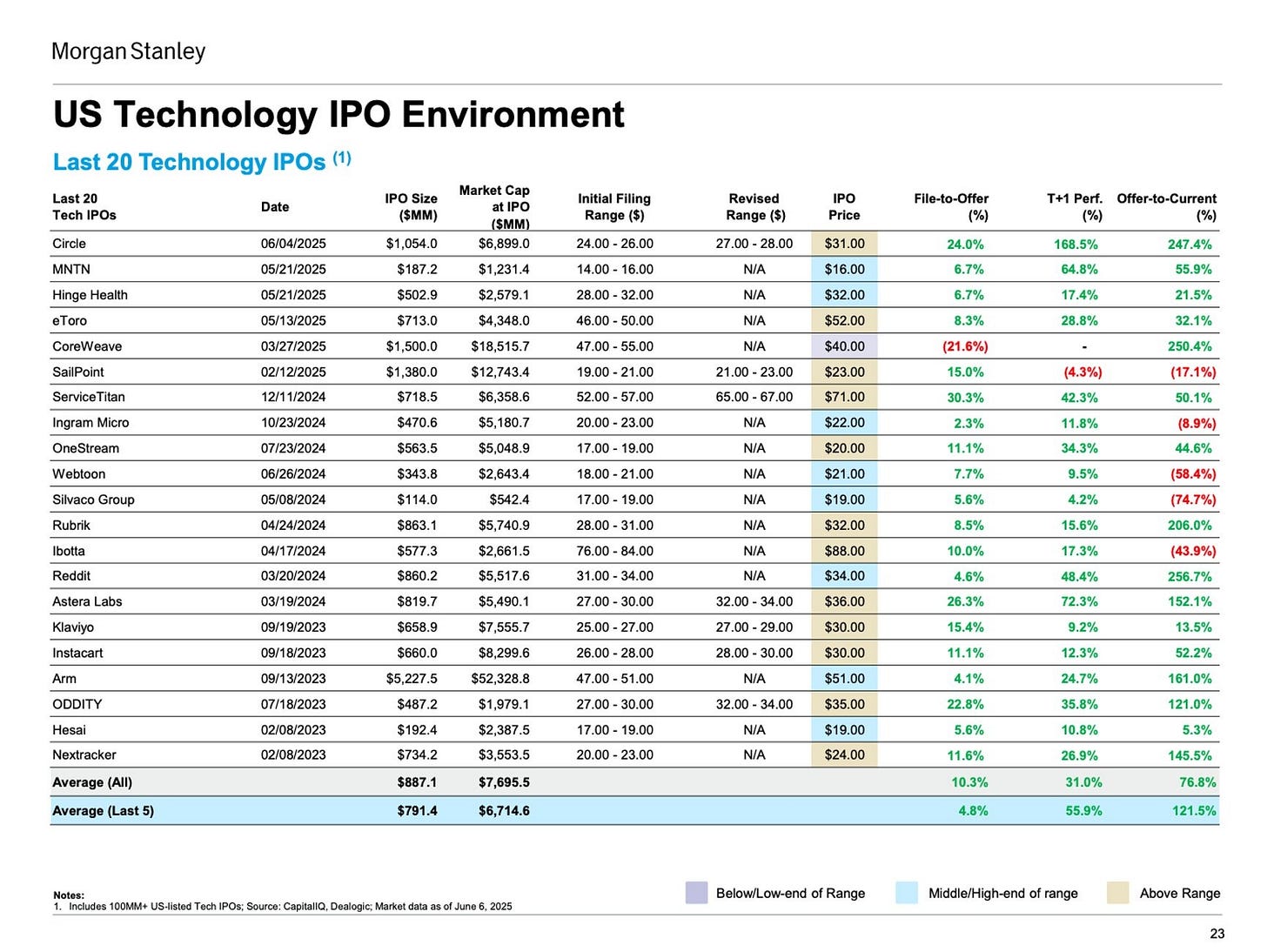

→ Why does it matter? The divergence is striking. While the Magnificent 7 have delivered exponential gains, the remaining 493 companies in the S&P 500 have moved modestly by comparison. This isn’t just a reflection of business fundamentals—it’s a function of structure. As more capital flows into index funds and ETFs, it disproportionately concentrates in the largest names, amplifying their momentum. Passive flows become performance drivers, not just followers—compounding the gap and reshaping the market beneath the surface.

→ Why does it matter? This is what exponential looks like. For 300,000 years, progress crawled. Then it sprinted. The chart makes last week’s point in pixels: optimism isn’t naïve—it’s math.

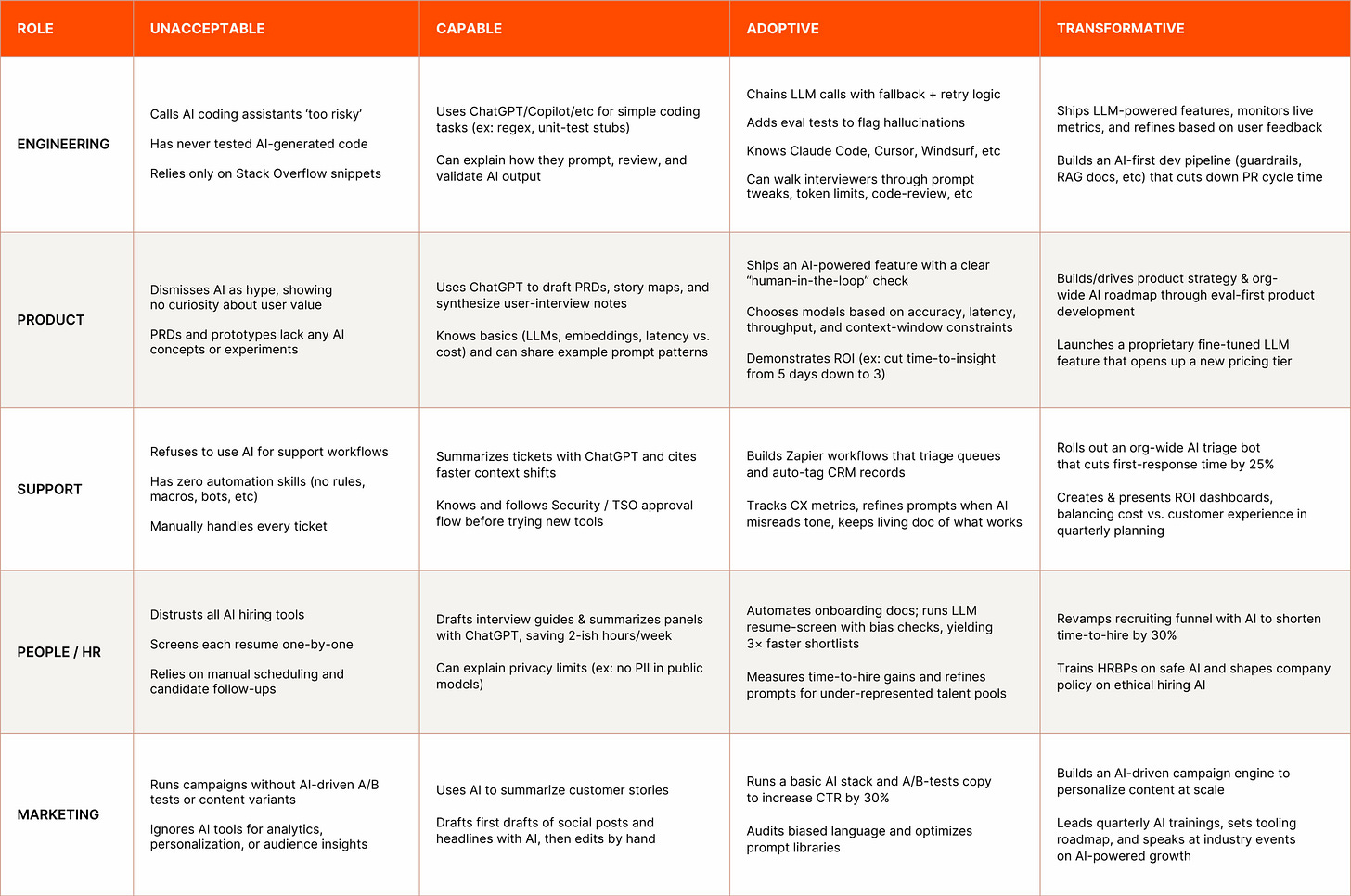

→ Why does it matter? This chart from Zapier offers a helpful lens for teams building AI fluency. It maps the shift from treating AI as a feature to making it a reflex—baked into how each function works, decides, and delivers. The real unlock isn’t just tools, but habits: shorter cycles, clearer metrics, and shared language across roles.

→ Why does it matter? Meta’s losing the AI talent war—engineers are turning down $2M+ to join OpenAI and Anthropic. The chart shows the flow: DeepMind is bleeding talent, mostly one-way. This week, Zuck struck back—buying 49% of Scale AI for $14B. Can’t hire the frontier? Own the supply chain. It’s also one way to stay under the antitrust radar!

Tweets that stopped my scroll:

→ Why does it matter? Wow! An AI that laughs—and it actually lands. Not just voice synthesis anymore, but timing, tone, presence.

→ Why does it matter? The “it” in AI is the dataset. When Anthropic pulled the plug on Windsurf, it wasn’t about models—it was about control. Slack and Reddit are doing the same: locking down data.

Worth a watch or listen at 1x:

→ Why does it matter? Agency isn’t a buzzword; it’s the new locus of self-transformation. In her viral essay “How to Be More Agentic” and now “Crossing the Cringe Minefield,” she reframes personal growth as less about optimization and more about confronting the exact places we reflexively avoid. In her framing, cringe isn’t embarrassment; it’s a compass. If you want to level up, the path isn’t smooth; it’s squirmy. And that’s the point.

→ Why does it matter? Vinod Khosla has seen thousands of startup pitches—and funded the ones that built the future. When he gives a masterclass on pitching, one should listen!

→ Why does it matter? There’s nobody better than Bill Gurley to give you a state of the union on venture capital and the capital markets.

Quotes & eyewash:

"A king does not abide within his tent while his men bleed and die upon the field. A king does not dine while his men go hungry, nor sleep when they stand at watch upon the wall. A king does not command his men's loyalty through fear nor purchase it with gold; he earns their love by the sweat of his own back and the pains he endures for their sake. That which comprises the harshest burden, a king lifts first and sets down last. A king does not require service of those he leads but provides it to them. He serves them, not they him."

Pressfield, Steven. Gates of Fire: An Epic Novel of the Battle of Thermopylae

→ Why does it matter? Ha! In all seriousness, I’m a fan of where Apple is taking us with Liquid Glass. It builds on the UI/UX already available in Apple Vision Pro and previews where Apple is going with glasses and other wearables!

The mission:

The Wall Street Journal once used ‘Read Ambitiously’ as a slogan, but it became a challenge I took to heart. If that old slogan still speaks to you, this weekly curated newsletter is for you. Every week, I will summarize the most important and impactful headlines across technology, finance, AI and enterprise SaaS. Together, we can read with an intent to grow, always be learning, and refine our lens to spot the best opportunities. As Jamie Dimon says, “Great leaders are readers.”